In its simplest form, client/server communication works like this: a client (the browser) sends a request for a specific resource, the website's server sends a response back, and the browser turns the returned HTML, CSS, and JavaScript into the page you see on your screen while reading this article. However, there is a big gray area in this send/receive exchange between server and client, and that is exactly what this article focuses on: what in the network connection might slow these requests down, why you as a web developer should care, and what you can do to optimize it.

Why Is the Internet Limited?

The internet is limited for many reasons that I explained in a previous article, but to summarise: bandwidth is the main reason our connections are limited. We all share the same available bandwidth, and you have to pay more to get a bigger share, which gives you a faster connection. Unfortunately, a server and a client don't know in advance how much data the network path between them can carry, or how much data the other side is ready to receive (its window size). That's why TCP uses mechanisms such as slow start and congestion control, as I will explain next.

Congestion Control

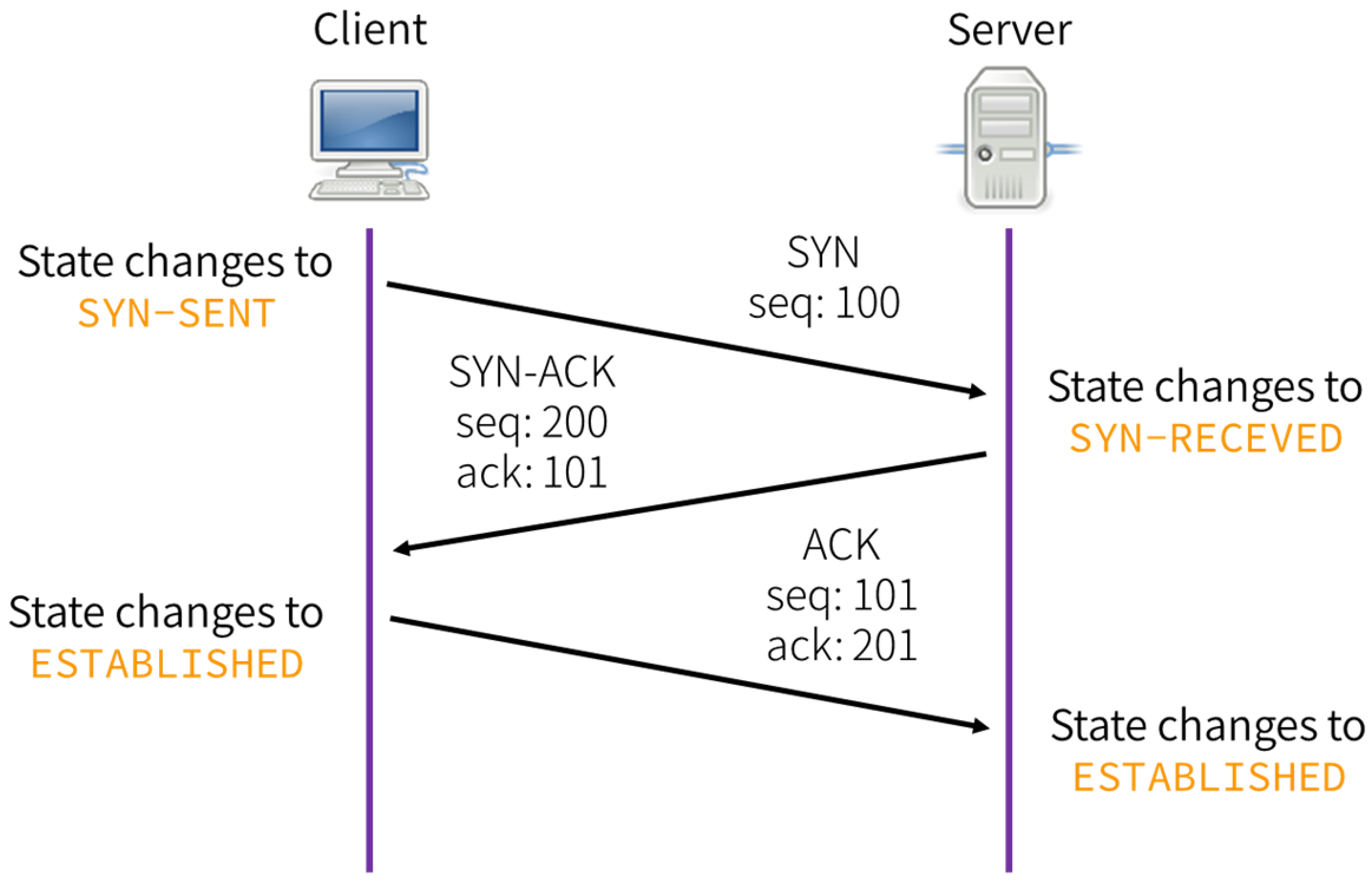

TCP 3 way handshake - source: mdpi.com 🔗

Every new connection to a server starts with the TCP three-way handshake (SYN, SYN-ACK, ACK) between the client and the server, followed by a TLS (formerly SSL) handshake when the site uses HTTPS. Only then can the server start sending the requested data. The response is often larger than what the server is allowed to send in one go: after sending a batch of data, the server has to wait for the client's acknowledgement (ACK) before sending more. If the server sent more data than the network path could carry, queues along the path would overflow and packets would be dropped; this is congestion. Congestion control is how TCP avoids losing data while keeping the transfer as fast as the connection allows, by deciding how much data can be in flight (sent but not yet acknowledged) at any moment. Right after the handshake, the server starts with a cautious phase called slow start, explained below.
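
To see why these handshakes matter, here is a minimal sketch that counts the round trips spent before the first response byte arrives. It assumes a full handshake every time (no TCP Fast Open, no TLS session resumption or 0-RTT) and ignores server processing and transmission time; the function names are hypothetical, chosen for illustration only.

```python
# Hedged sketch: round trips spent on connection setup before the client can
# send its first HTTP request. Assumes full handshakes (no TCP Fast Open, no
# TLS session resumption / 0-RTT) and ignores server processing time.

TLS_HANDSHAKE_RTTS = {
    None: 0,   # plain HTTP, no TLS
    "1.2": 2,  # full TLS 1.2 handshake
    "1.3": 1,  # full TLS 1.3 handshake
}

def setup_round_trips(tls_version=None):
    """1 RTT for TCP (SYN -> SYN-ACK) plus the TLS handshake round trips."""
    tcp_rtts = 1  # the client's final ACK can travel with its next message
    return tcp_rtts + TLS_HANDSHAKE_RTTS[tls_version]

def time_to_first_byte_ms(rtt_ms, tls_version=None):
    """Setup round trips plus one round trip for the request/response."""
    return (setup_round_trips(tls_version) + 1) * rtt_ms

if __name__ == "__main__":
    for version in (None, "1.2", "1.3"):
        print(version, time_to_first_byte_ms(100, version), "ms")
```

With a 100 ms round-trip time, this model gives 200 ms for plain HTTP, 400 ms with TLS 1.2, and 300 ms with TLS 1.3 before the first byte of the response arrives.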

Slow start Algorithm

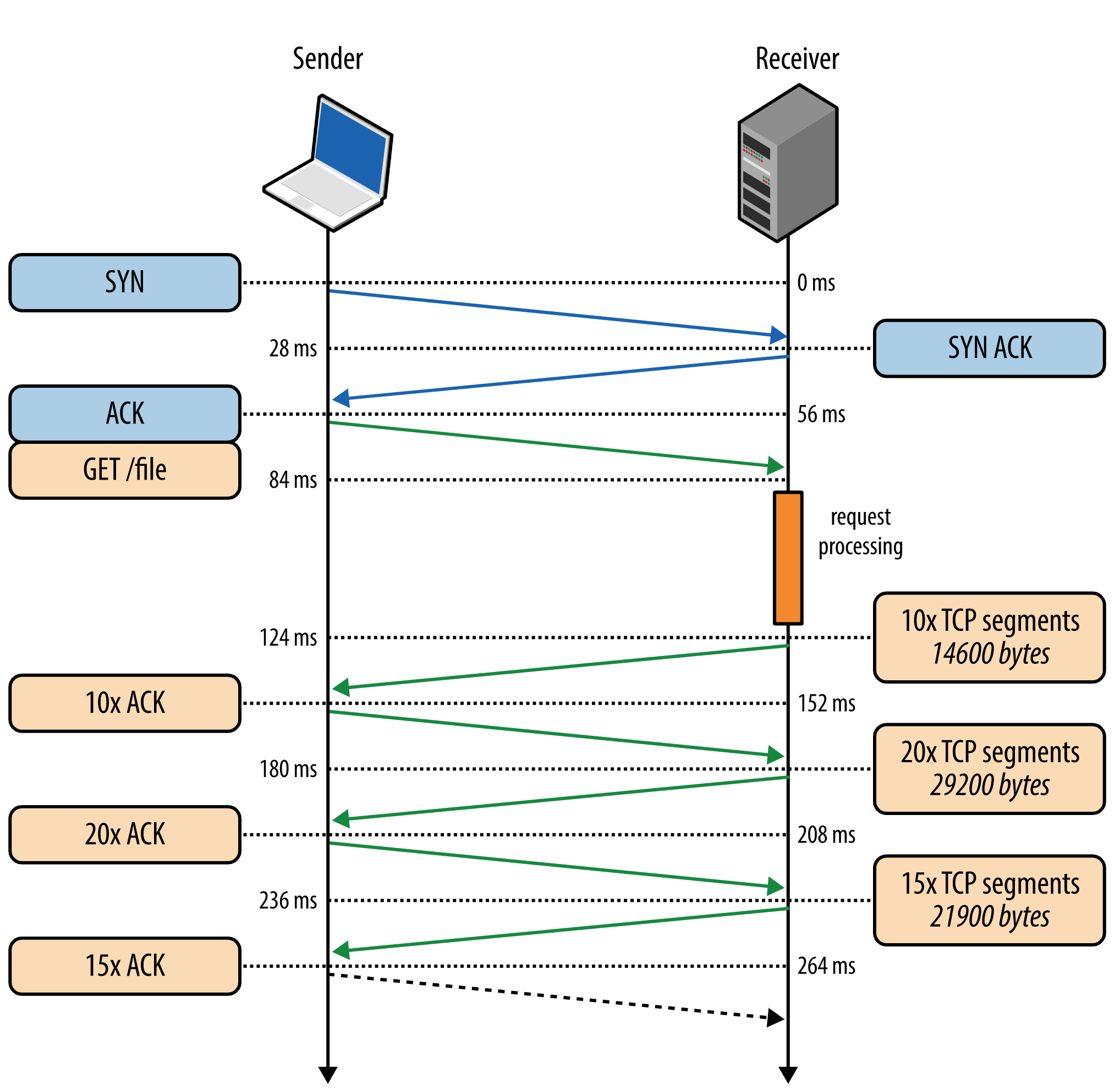

Slow start is the algorithm a TCP sender (here, the server) uses to gradually discover how much data the network path to the client can handle. Right after the TCP handshake, the server sends only a small amount of data, limited by its congestion window (cwnd). This initial window used to be 2 segments (RFC 2581, April 1999) and later up to 4 segments (RFC 3390, October 2002), roughly 4 KB. Since RFC 6928 (April 2013), most servers start with 10 segments, which is about 14 KB with a typical 1,460-byte segment. The server then waits for the client to acknowledge the received data before sending more; the client's ACKs also advertise how much more data it is ready to receive (its receive window).

slow start algorithm - source 🔗
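
Both RFCs define the initial window in bytes as a formula of the maximum segment size (MSS). The sketch below simply evaluates those two formulas; the 1,460-byte MSS is an assumption (a common value for Ethernet paths over IPv4 without TCP options), and the function names are hypothetical.

```python
# Hedged sketch of the initial congestion window (IW) formulas, in bytes,
# from RFC 3390 and RFC 6928. MSS = 1460 bytes is an assumed typical value.

def initial_window_rfc3390(mss):
    """RFC 3390: IW = min(4*MSS, max(2*MSS, 4380 bytes))."""
    return min(4 * mss, max(2 * mss, 4380))

def initial_window_rfc6928(mss):
    """RFC 6928: IW = min(10*MSS, max(2*MSS, 14600 bytes))."""
    return min(10 * mss, max(2 * mss, 14600))

if __name__ == "__main__":
    mss = 1460
    print(initial_window_rfc3390(mss))  # 4380 bytes (3 segments)
    print(initial_window_rfc6928(mss))  # 14600 bytes (10 segments)
```

With a 1,460-byte MSS the older formula allows 3 segments (4,380 bytes) and the newer one 10 segments (14,600 bytes), which is where the "14 KB" figure comes from.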

Each time the client acknowledges data, the server grows its congestion window, so the window roughly doubles every round trip: 10 segments, then 20, then 40, and so on. This exponential growth continues until the server detects packet loss (through duplicate ACKs or a timeout), which is normal and signals that the path is full, and then shrinks its window accordingly; the amount in flight is also always capped by the client's advertised receive window, and the process naturally ends when the requested file has been fully transferred. The point of this mechanism is to find, as quickly as possible, the largest amount of data that both sides and the network between them can handle, without causing packet loss.
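
The growth pattern above can be sketched as a simplified model. This is not how any particular kernel implements it: the loss rounds are passed in as input rather than detected, and the reaction to loss is a simplified Reno-style halving (as for loss detected by duplicate ACKs) followed by linear growth; real stacks such as Linux's default CUBIC differ in detail.

```python
# Hedged, simplified model of slow start plus a Reno-style reaction to loss.
# cwnd is in segments. Loss rounds are an input, not detected by the model.

def cwnd_trace(rounds, initial_cwnd=10, loss_rounds=()):
    """Return the congestion window (segments) used in each round trip."""
    cwnd = initial_cwnd
    ssthresh = float("inf")  # no threshold until the first loss
    trace = []
    for rnd in range(1, rounds + 1):
        trace.append(cwnd)
        if rnd in loss_rounds:
            ssthresh = max(cwnd // 2, 2)  # multiplicative decrease on loss
            cwnd = ssthresh
        elif cwnd < ssthresh:
            cwnd *= 2                     # slow start: double every RTT
        else:
            cwnd += 1                     # congestion avoidance: +1 per RTT
    return trace

if __name__ == "__main__":
    print(cwnd_trace(6, loss_rounds={4}))  # [10, 20, 40, 80, 40, 41]
```

Without loss the window simply doubles (10, 20, 40, 80, ...); a loss in the fourth round halves it, after which it grows by one segment per round trip instead of doubling.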

Inline CSS: you might be familiar with the well-known advice to inline the critical CSS in the head of the HTML document. This is exactly because of slow start: we want the critical CSS to arrive early enough (within the first ~14 KB) so the browser can render the page without reflows or flickering, instead of loading it later, which hurts especially on high-latency connections.
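
A quick way to check this advice is to measure the compressed size of the HTML document with its inlined critical CSS. The sketch below assumes gzip compression and a 14,600-byte budget (IW10 with a 1,460-byte MSS); in practice HTTP headers, TLS record overhead, and other data also consume part of the first window, so treat the result as an optimistic upper bound. The helper names are hypothetical.

```python
# Hedged sketch: does the compressed HTML (with inlined critical CSS) fit in
# the first congestion window? Budget assumes IW10 and a 1460-byte MSS;
# headers and TLS overhead are ignored, so the real budget is smaller.
import gzip

INITIAL_WINDOW_BYTES = 10 * 1460  # assumption: IW10, MSS 1460

def compressed_size(html: str) -> int:
    """Size in bytes of the gzip-compressed UTF-8 document."""
    return len(gzip.compress(html.encode("utf-8")))

def fits_initial_window(html: str, budget: int = INITIAL_WINDOW_BYTES) -> bool:
    """True if the compressed document fits within the budget."""
    return compressed_size(html) <= budget

if __name__ == "__main__":
    page = (
        "<!doctype html><html><head><style>"
        "body{margin:0;font-family:sans-serif}.hero{padding:2rem}"
        "</style></head><body><div class='hero'>Hello</div></body></html>"
    )
    print(compressed_size(page), fits_initial_window(page))
```

In a real project you would read the built HTML file instead of a hard-coded string and compress it the same way your server does (gzip or Brotli).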

Slow start restart (SSR)

TCP usually shrinks the congestion window back toward its initial size (around 14 KB) if a connection stays idle for longer than the retransmission timeout, so the next transfer has to go through slow start again. This is called Slow Start Restart (SSR), and it commonly affects long-lived connections that sit idle between requests, such as HTTP keep-alive connections. That's why it is recommended to disable SSR on the server in that case to avoid poor performance, using the following command (on Linux):

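> sysctl -w net.ipv4.tcp_slow_start_after_idle=0

The command needs root privileges, and the change does not survive a reboot unless you also add the setting to /etc/sysctl.conf (or a file in /etc/sysctl.d/). Reusing the same connection whenever possible is also a good strategy to avoid paying again for the handshakes and a fresh slow start.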
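
Before changing anything, you may want to check the current value. On Linux the same setting is exposed under /proc/sys, where 1 (the default) means SSR is enabled. A minimal sketch, assuming a Linux host; the function name is hypothetical and it returns None where the file does not exist.

```python
# Hedged sketch (Linux only): read the sysctl that controls Slow Start Restart.
# 1 (the default) means SSR is enabled, 0 means it is disabled.
from pathlib import Path

SSR_SYSCTL = Path("/proc/sys/net/ipv4/tcp_slow_start_after_idle")

def ssr_enabled(path: Path = SSR_SYSCTL):
    """Return True/False for the SSR setting, or None if it can't be read."""
    try:
        return path.read_text().strip() != "0"
    except OSError:
        return None  # not Linux, or /proc is not available

if __name__ == "__main__":
    state = ssr_enabled()
    if state is None:
        print("tcp_slow_start_after_idle is not available on this system")
    else:
        print("SSR enabled" if state else "SSR disabled")
```

Reading the value does not need root; only changing it does.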

How to optimize for Slow Start

First, you need to know that slow start affects both heavy and light websites. No matter how big or small your files are, every new connection starts from the initial congestion window and grows it as explained above.

Shipping less code whenever possible is a very good strategy to optimise for slow start. SEO benefits from it as well, since in most cases the first loaded view is what search engines cache, so you need to ship what matters first, as soon as possible.
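
To put numbers on "ship less", here is a sketch that counts how many round trips slow start needs to deliver a response of a given size over a fresh connection. It assumes IW10, a 1,460-byte MSS, no packet loss, and a receive window that never limits the sender; "KB" here means 1,000 bytes. The function name is hypothetical.

```python
# Hedged sketch: round trips slow start needs to deliver a response on a
# fresh connection. Assumes IW10, MSS 1460, no loss, unlimited receive window.

def round_trips_to_deliver(size_bytes, initial_cwnd=10, mss=1460):
    """Count RTTs until size_bytes have been sent, doubling cwnd each RTT."""
    cwnd = initial_cwnd  # congestion window, in segments
    sent = 0
    rtts = 0
    while sent < size_bytes:
        sent += cwnd * mss  # one full window per round trip
        cwnd *= 2           # slow start doubles the window every RTT
        rtts += 1
    return rtts

if __name__ == "__main__":
    for kb in (14, 50, 100, 500):
        print(f"{kb} KB: {round_trips_to_deliver(kb * 1000)} round trip(s)")
```

Under these assumptions a 14 KB response arrives in 1 round trip, 50 KB and 100 KB both need 3, and 500 KB needs 6. Each extra round trip costs a full RTT, which is why trimming a page just below one of these boundaries pays off most.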

Final performance thoughts:

All the information above should be useful when working on web performance from the network side. Here is a list of the advice I find useful for optimising around TCP congestion control:

- Send fewer bits: no bit is faster than one that is not sent.

- Use CDNs: you can't make slow start itself faster, but you can reduce latency by moving the server closer to the client, which shortens the round-trip time that every step of slow start depends on.

- Reuse TCP connections: as you have seen, establishing a new connection is costly for performance.

- Keep the server kernel up to date: recent kernels use an initial cwnd of 10 segments and enable window scaling by default.

- Disable SSR: make sure to disable the slow start restart

- Compress transferred data: compression reduces the number of bytes to send, so the response fits in fewer segments and needs fewer round trips of acknowledgements.
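
The tips above can be tied together in a rough back-of-the-envelope model: setup round trips (skipped when a connection is reused) plus slow-start round trips for the payload, multiplied by the RTT. Everything here is a hypothetical illustration, not a measurement: it ignores server processing time, bandwidth limits, packet loss, and TLS session resumption, and it assumes a reused connection still starts from the initial window, which is pessimistic when SSR is disabled.

```python
# Hedged back-of-the-envelope model tying the tips together. All inputs are
# hypothetical; ignores server time, bandwidth limits, loss, and resumption.

def estimate_load_ms(payload_bytes, rtt_ms, reuse_connection=False,
                     tls_rtts=1, initial_cwnd=10, mss=1460):
    """Rough time to fetch one response: setup RTTs + slow-start RTTs."""
    # TCP handshake (1 RTT) + TLS handshake (1 RTT for TLS 1.3, 2 for TLS 1.2)
    setup_rtts = 0 if reuse_connection else 1 + tls_rtts
    cwnd, sent, data_rtts = initial_cwnd, 0, 0
    while sent < payload_bytes:
        sent += cwnd * mss  # one window of data per round trip
        cwnd *= 2           # slow start doubling
        data_rtts += 1
    return (setup_rtts + data_rtts) * rtt_ms

if __name__ == "__main__":
    print(estimate_load_ms(100_000, 150))                       # far origin, uncompressed
    print(estimate_load_ms(30_000, 20))                         # nearby CDN, compressed
    print(estimate_load_ms(30_000, 20, reuse_connection=True))  # plus connection reuse
```

With these made-up inputs, a 100 KB response from a distant origin (150 ms RTT) takes about 750 ms, a compressed 30 KB response from a nearby CDN (20 ms RTT) about 80 ms, and about 40 ms when the connection is reused.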

See you 👋