In this new Performance post, I want to focus on one of the most important causes of a poorly performing web app: slow data fetching. At first it can look like purely a server issue, but a big part of it is actually in your hands as a frontend (FE) engineer.

The time spent waiting for data is a key factor in whether a web app feels fast or slow. In this post we are going to focus on some techniques you can use in your React application (or with any other framework) to mitigate the impact of slow requests.

What is the Problem?

Let’s start by assuming that you have a web application that makes a bunch of different requests to the server, and that some of those responses are very large and take too long to arrive.

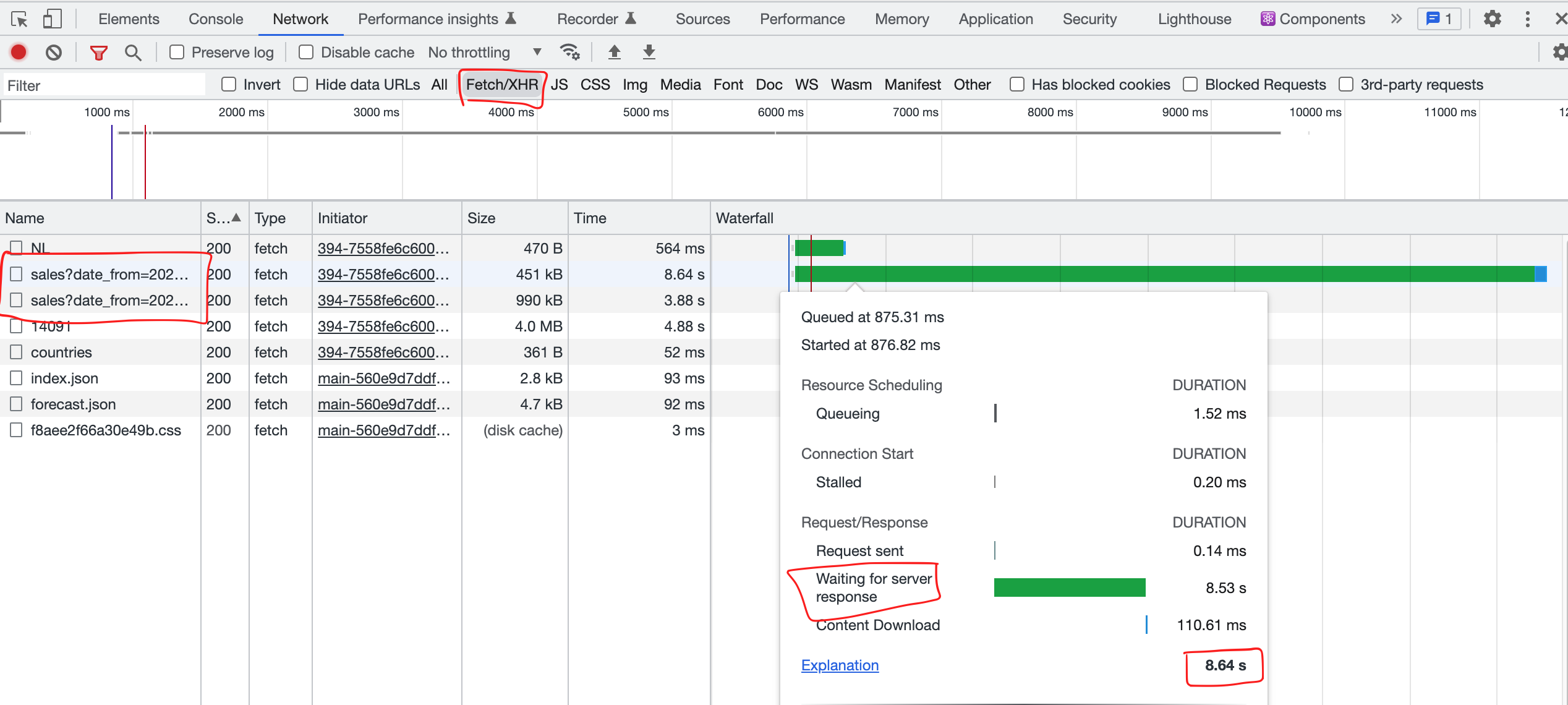

Let’s have a look into a project that is loading a huge amount of data on page load, open the network tab in your developer tools (mac: CMD + i or windows: CTRL + i), from there you will see this view:

There are several ways to improve the long waterfall on the right-hand side. Server latency can be one cause, but it is not the only one: the response may also be too big, with no compression or not enough of it (for example, using Brotli 🔗 instead of Gzip 🔗 can help in some cases). Let’s go through several potential causes and how to fix each of them.

Using Cache

The first thing that might come to mind is to cache responses for future use, so that only the first load takes long and every subsequent load is served from the cache. Good idea!

First, it is important to understand how caching works, so you can decide which parts to keep and serve them from the cache whenever possible.

There are multiple types of caching we can use, such as the HTTP cache or an in-memory cache. For this case the HTTP cache 🔗 probably makes more sense. Briefly, it works as follows:

The user requests the data for the first time and the response gets cached. Each time the user makes the same request afterwards, the browser first checks whether it has a cached version of that response that is not stale. If it does, it responds with it; if not, it makes the request normally and caches the new response.

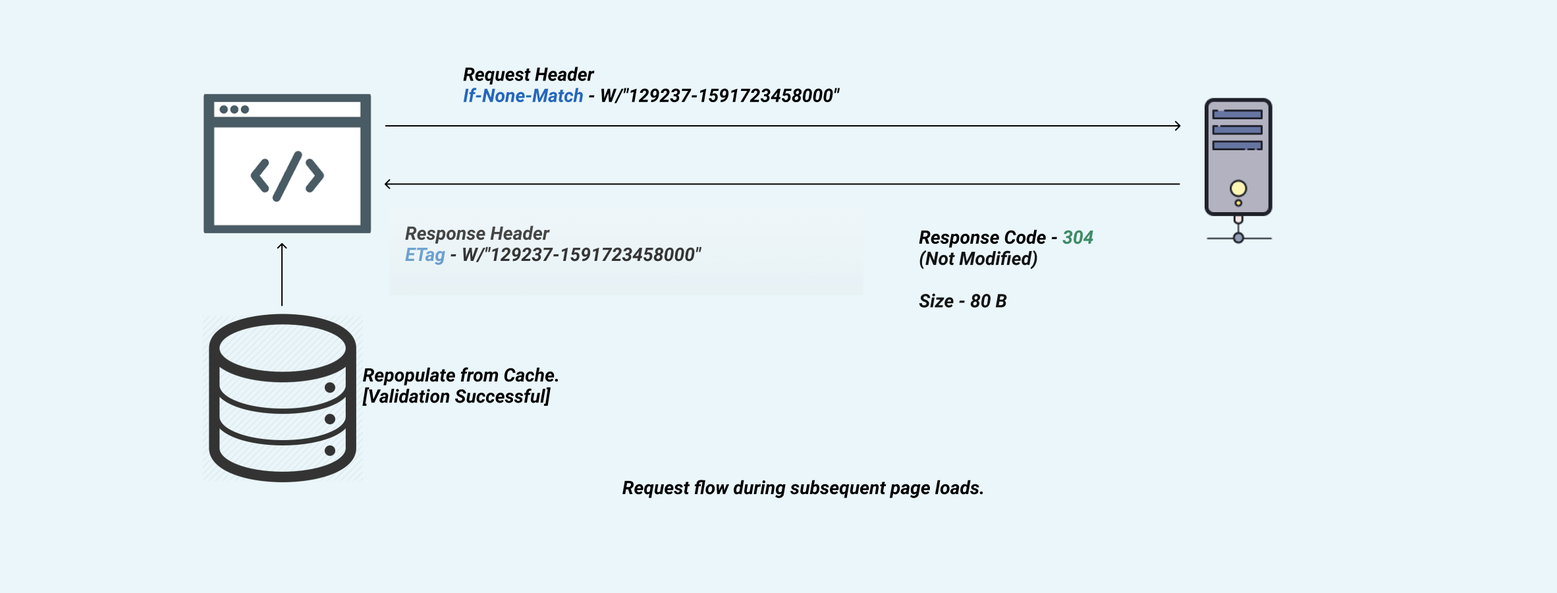

Note: the ETag 🔗 is controlled by the server. When a cached response becomes stale, the browser sends its ETag value back in the If-None-Match header (its counterpart, If-Match 🔗, is mainly used for conditional updates). If the server finds that the value has changed, it sends a full response with a new ETag, which replaces the cached copy. If the ETag hasn’t changed, it responds with a small 304 Not Modified response and the browser keeps using the cached data.
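Browsers do all of this automatically for the HTTP cache, but the flow is easier to follow when spelled out. Below is a minimal sketch of a hypothetical in-memory cache (the `CachedFetcher` name, the injectable `fetchFn`, and the fixed `maxAgeMs` freshness window standing in for `Cache-Control: max-age` are all assumptions for illustration, not a browser API). It serves a fresh entry without touching the network, revalidates a stale one with `If-None-Match`, and reuses the cached body on `304 Not Modified`.

```typescript
// Hypothetical in-memory cache illustrating freshness + ETag revalidation.
type FetchFn = (url: string, init?: RequestInit) => Promise<Response>;

interface Entry {
  body: string;
  etag: string | null;
  storedAt: number;
}

class CachedFetcher {
  private entries = new Map<string, Entry>();
  private fetchFn: FetchFn;
  private maxAgeMs: number; // assumed freshness window, like Cache-Control: max-age
  private now: () => number;

  constructor(fetchFn: FetchFn, maxAgeMs: number, now: () => number = Date.now) {
    this.fetchFn = fetchFn;
    this.maxAgeMs = maxAgeMs;
    this.now = now;
  }

  async get(url: string): Promise<string> {
    const cached = this.entries.get(url);

    // 1. Fresh entry: answer from the cache without a network request.
    if (cached && this.now() - cached.storedAt < this.maxAgeMs) {
      return cached.body;
    }

    // 2. Stale entry: send the stored ETag so the server can answer 304.
    const headers: Record<string, string> = {};
    if (cached?.etag) headers['If-None-Match'] = cached.etag;
    const res = await this.fetchFn(url, { headers });

    if (res.status === 304 && cached) {
      // 3a. Not Modified: reuse the cached body and restart its freshness window.
      cached.storedAt = this.now();
      return cached.body;
    }
    if (!res.ok) throw new Error(`Request failed with status ${res.status}`);

    // 3b. Changed (or first request): store the full body and the new ETag.
    const body = await res.text();
    this.entries.set(url, { body, etag: res.headers.get('ETag'), storedAt: this.now() });
    return body;
  }
}
```

In the test scenario the first call hits the network, a call within the freshness window is served from memory, and a later call revalidates and gets a cheap 304.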

A final note: libraries like react-query 🔗 or SWR 🔗 help here as well, since both serve the cached data immediately and revalidate it in the background. This strategy is called Stale While Revalidate 🔗, and it is worth reading about.
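The core of stale-while-revalidate fits in a few lines. The sketch below is a hypothetical helper (`createSwrCache` and its `onUpdate` callback are illustrative names, not the SWR or react-query API): it returns cached, possibly stale data right away and refreshes it in the background, only waiting for the network when nothing is cached yet. It also shares one in-flight request per key, a deduplication idea those libraries apply too.

```typescript
// Minimal stale-while-revalidate sketch (hypothetical, not a library API).
type Fetcher<T> = (key: string) => Promise<T>;

function createSwrCache<T>(fetcher: Fetcher<T>) {
  const data = new Map<string, T>();
  const inFlight = new Map<string, Promise<T>>();

  // Fetch from the network, sharing one pending request per key, and store the result.
  function revalidate(key: string): Promise<T> {
    const pending = inFlight.get(key);
    if (pending) return pending;
    const request = fetcher(key)
      .then((value) => {
        data.set(key, value);
        return value;
      })
      .finally(() => inFlight.delete(key));
    inFlight.set(key, request);
    return request;
  }

  // Serve cached data immediately and refresh it in the background;
  // only wait for the network when nothing is cached yet.
  function get(key: string, onUpdate?: (value: T) => void): Promise<T> {
    if (data.has(key)) {
      const stale = data.get(key) as T;
      revalidate(key)
        .then(onUpdate)
        .catch(() => {
          /* keep serving the stale value if revalidation fails */
        });
      return Promise.resolve(stale);
    }
    return revalidate(key);
  }

  return { get };
}
```

A real library would also compare the new value with the old one before notifying the UI; this sketch calls `onUpdate` on every successful refresh.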

Manage wasted re-renders

In modern frontend frameworks it is very tricky to prevent re-renders. Of course it is best to have no wasted re-renders at all, but preventing them in big applications with a complex architecture is extremely hard. What we can do is mitigate their effect, or at least control what runs on each re-render.

Let’s take React 🔗 as an example. useEffect can bring a lot of unexpected side effects, especially when you put a lot of logic inside it: that logic requires more dependencies, and the more dependencies an effect has, the more likely it is to run again on a re-render.

Since today we are talking about fetching performance, let’s focus on functions that make a server request, like the one below:

```js
const fetchPosts = async () => {
  const res = await fetch('http://example.com/api/posts');
  // fetch resolves to a Response object; parse its JSON body
  return res.json();
};
```

If you use this function inside a useEffect, you need to add it to the effect's dependencies, as below:

```js
useEffect(() => {
  (async () => {
    const posts = await fetchPosts();
    console.log(posts);
  })();
}, [fetchPosts]); // the effect re-runs whenever fetchPosts changes
```

So every time fetchPosts changes between renders, the code inside the useEffect runs again, which means more wasted requests to the server. You might wonder why the function is considered changed, since its code never changes. That is a good question, so let me explain.

Functions are objects, and React compares dependencies by reference (using Object.is), not by their content. Every time your component renders, its body runs again and creates a new version of the same function with a new reference, so React sees a changed dependency and runs the effect again.

Fortunately, React Hooks provide a simple solution for this as well: the useCallback hook. It memoizes 🔗 the function, returning the same reference across re-renders as long as its own dependencies don't change. Because the function keeps the same reference, it no longer makes the effect run again. So it is better to write the same function as follows:

```js
const fetchPosts = useCallback(async () => {
  const res = await fetch('http://example.com/api/posts');
  return res.json();
}, []); // no dependencies: the same function reference is reused on every render
```

So the main takeaway here is to wrap functions that are used as effect dependencies, or passed as props to memoized child components, in a useCallback hook, to prevent wasted re-renders and avoid keeping the server busy.

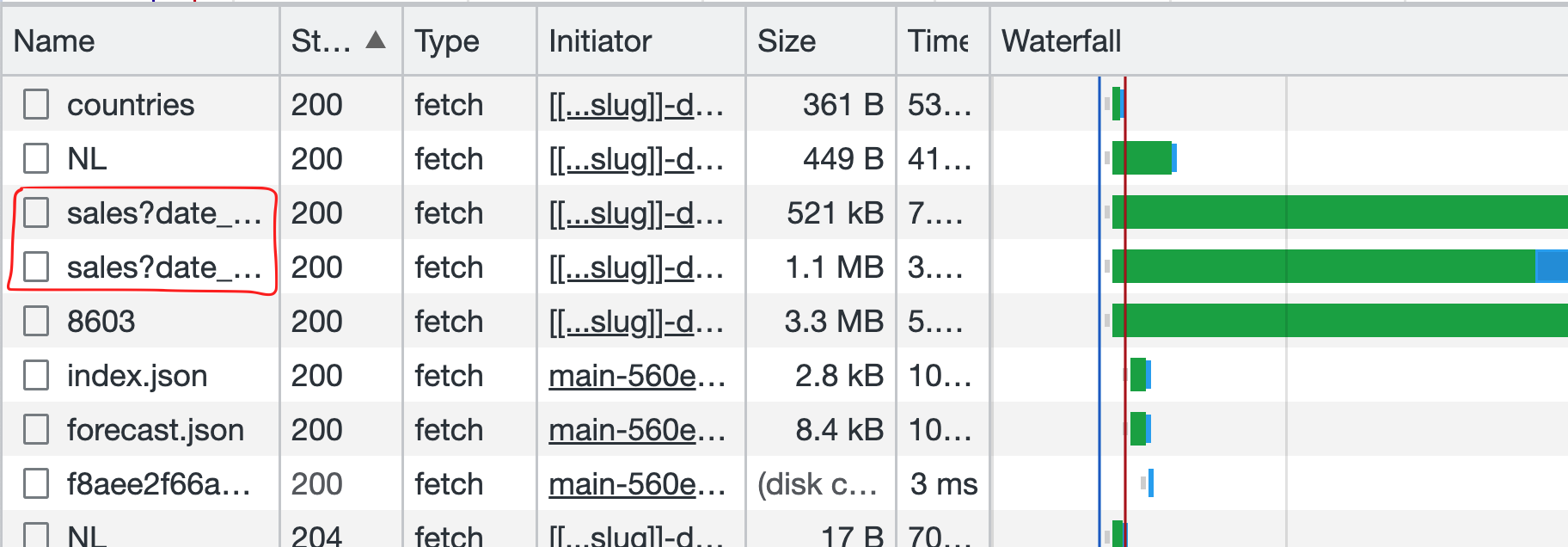

Double (or more) calls to endpoints

Often you will find in the Network tab that for each expected call, two or more calls are made, even though you have done your best to prevent all wasted re-renders.

Double calls don't only keep your server busy for no reason; they can also cause unnecessary loading states or flickers in the UI, which is annoying UX and an obvious waste of resources. This can drive you (and me) crazy, and you might have no idea what to do, but there is still a solution.

For instance, suppose you are implementing a type-ahead search that makes a request whenever the user's input changes. A debounce can certainly help, but what if the search request takes longer than the debounce duration? You might end up with multiple requests pending at the same time, each updating the page with different results as it resolves. Debouncing or preventing wasted re-renders alone cannot fix this: what you really want is to let the latest request continue and ignore the earlier ones.
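For reference, a debounce can be as small as the sketch below (`debounce` here is a hypothetical helper; libraries such as lodash ship their own). It only delays and collapses calls: once a request has actually been sent, a later keystroke cannot cancel it, which is exactly the gap described above.

```typescript
// Minimal debounce: only the last call within `waitMs` actually runs.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  waitMs: number,
): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    // Every new call cancels the pending one and restarts the wait.
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };
}
```

Typing "r", "re", "rea" quickly results in a single search for "rea", but if that request is slow and the user keeps typing, the next one can still overlap with it.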

This is exactly the problem that the AbortController 🔗 browser API solves (and the good news is that it is supported in all modern browsers 🔗). It lets you send a signal to a running request telling it to stop, so when there are two or more calls, you can cancel the earlier one every time a new request starts.

Implementing this is easy because the fetch API supports it out of the box: pass the signal of an AbortController instance in the signal option, and you can abort the request at any time. Here is an example:

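```js
const controller = new AbortController();
const signal = controller.signal;

// Abort the request if it hasn't finished within 3 seconds
setTimeout(() => controller.abort(), 3000);

try {
  const res = await fetch('http://example.com/api/search', { signal });
  console.log(await res.json());
} catch (err) {
  // an aborted fetch rejects with an AbortError; rethrow anything else
  if (err.name !== 'AbortError') throw err;
}
```

The code above runs a fetch request with the AbortController's signal, and if the request hasn’t completed after 3 seconds, it aborts it using the controller. The request then appears as canceled in DevTools, and the fetch promise rejects with an AbortError. To stop duplicate calls, apply the same idea: keep the controller of the previous request and call abort() on it before starting a new one, so only the latest request survives.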
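Here is a minimal sketch of that "latest request wins" pattern. `createLatestOnlyFetcher` is a hypothetical helper, the `fetchFn` parameter exists only so it can be tested without a real server, and resolving superseded calls with `undefined` is a design choice of this sketch rather than anything fetch prescribes.

```typescript
// Keeps only the latest request alive: starting a new one aborts the previous one.
type FetchFn = (url: string, init?: RequestInit) => Promise<Response>;

function createLatestOnlyFetcher(fetchFn: FetchFn = fetch) {
  let controller: AbortController | undefined;

  // Resolves with the parsed JSON, or with undefined if a newer call superseded it.
  return async function fetchLatest(url: string): Promise<unknown> {
    controller?.abort(); // cancel the earlier, now-outdated request
    const current = new AbortController();
    controller = current;
    try {
      const res = await fetchFn(url, { signal: current.signal });
      if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
      return await res.json();
    } catch (err) {
      // An aborted request rejects with an AbortError: expected here, not a failure.
      if (current.signal.aborted) return undefined;
      throw err;
    }
  };
}
```

In a type-ahead search you would call fetchLatest on each (debounced) input change and ignore `undefined` results, so only the response for the latest query reaches the page.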

Worth mentioning: I recently used this technique in an internal project for a client. The request was fetching a huge amount of data that already took about 7 seconds to load, and I noticed it was being called twice. Using this technique, I was able to save about 4 seconds of loading time. That was huge!

Lazy-loading heavy parts

You might also consider lazy-loading. What usually matters most is the viewport, the part of the page users see when they first land on it. Whatever sits below it is less important on the first load, so you can lazy-load it without hurting the UX until the user shows intent to see it by scrolling or hovering.

If the list is very long, it is best to paginate the results. If that is not possible, delay the request until the user scrolls to it; you can still keep a good experience by showing a loading indicator or a skeleton design.

By doing that, you minimize the cost of loading a large amount of data that the user might never scroll down to see.
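One way to delay a request until the user scrolls to it is the browser's IntersectionObserver API. The sketch below is hypothetical (`loadWhenVisible`, the injectable `createObserver` factory, and the 200px `rootMargin`, which starts loading slightly before the element is visible, are all assumptions): it runs a `load` callback once, the first time a placeholder element approaches the viewport, then stops observing.

```typescript
// Runs `load` once, the first time `target` comes close to the viewport.
type VisibilityObserver = { observe(target: Element): void; disconnect(): void };
type ObserverFactory = (
  onChange: (entries: ReadonlyArray<{ isIntersecting: boolean }>) => void,
) => VisibilityObserver;

// Default: a real IntersectionObserver that fires ~200px before the element is visible.
const browserObserver: ObserverFactory = (onChange) =>
  new IntersectionObserver(onChange, { rootMargin: '200px' });

function loadWhenVisible(
  target: Element,
  load: () => void,
  createObserver: ObserverFactory = browserObserver,
): () => void {
  let done = false;
  const observer = createObserver((entries) => {
    if (!done && entries.some((entry) => entry.isIntersecting)) {
      done = true;
      observer.disconnect(); // we only need to load once
      load();
    }
  });
  observer.observe(target);
  return () => observer.disconnect(); // cleanup, e.g. when the component unmounts
}
```

For example, `loadWhenVisible(placeholderEl, () => fetchPosts().then(renderList))` (with hypothetical `placeholderEl` and `renderList`) would only request the posts once the user scrolls near that section.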

One way to do this in React is to split your components, import the slow ones that sit further down the page (outside the viewport on load) using React.lazy, and render them inside a Suspense component. That way you can easily provide a fallback and lazy-load the entire component, as follows:

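```tsx
// components/List.tsx
import { useEffect, useState } from 'react';
// getPosts() is your data-fetching helper (like fetchPosts above); the path is hypothetical
import { getPosts } from '../api/posts';

type Post = { id: number; title: string }; // assumed shape of a post

export default function List() {
  const [posts, setPosts] = useState<Post[]>([]);

  useEffect(() => {
    // block body: an effect may only return a cleanup function, not a Promise
    (async () => {
      setPosts(await getPosts());
    })();
  }, []);

  return (
    <>
      {posts.map((post) => (
        // each list item needs a stable key
        <h1 key={post.id}>{post.title}</h1>
      ))}
    </>
  );
}
```

```tsx
// components/App.tsx
import { lazy, Suspense } from 'react';

// List is split into its own chunk, downloaded the first time it renders
const List = lazy(() => import('./List'));

export default function App() {
  return (
    <>
      {/* the top viewport with other random data and a hero image */}
      <Suspense fallback="loading...">
        <List />
      </Suspense>
    </>
  );
}
```

In the example above, List is split into a separate bundle chunk that is only downloaded when it is first rendered, and until it is ready, Suspense shows a friendly loading... message instead of holding up the rest of the page. Keep in mind that React.lazy on its own does not wait for the component to scroll into view, and the fallback only covers the chunk download, not the getPosts() request that List starts after it mounts. To defer the data request until the user actually reaches that part of the page, render List only once its placeholder becomes visible, for example with an IntersectionObserver.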

It is also good to know that a single Suspense boundary covers every lazy component inside it, so you can put several of them in the same Suspense component, or nest Suspense components to show different messages.

That way, you avoid fetching data the user doesn’t need at the moment the page loads.

Important note: if the component is above the fold, meaning in the viewport on first load, don’t lazy-load it. This can hurt performance instead of improving it: think about the Core Web Vitals 🔗, where it will make the LCP take longer and could affect the FID as well.

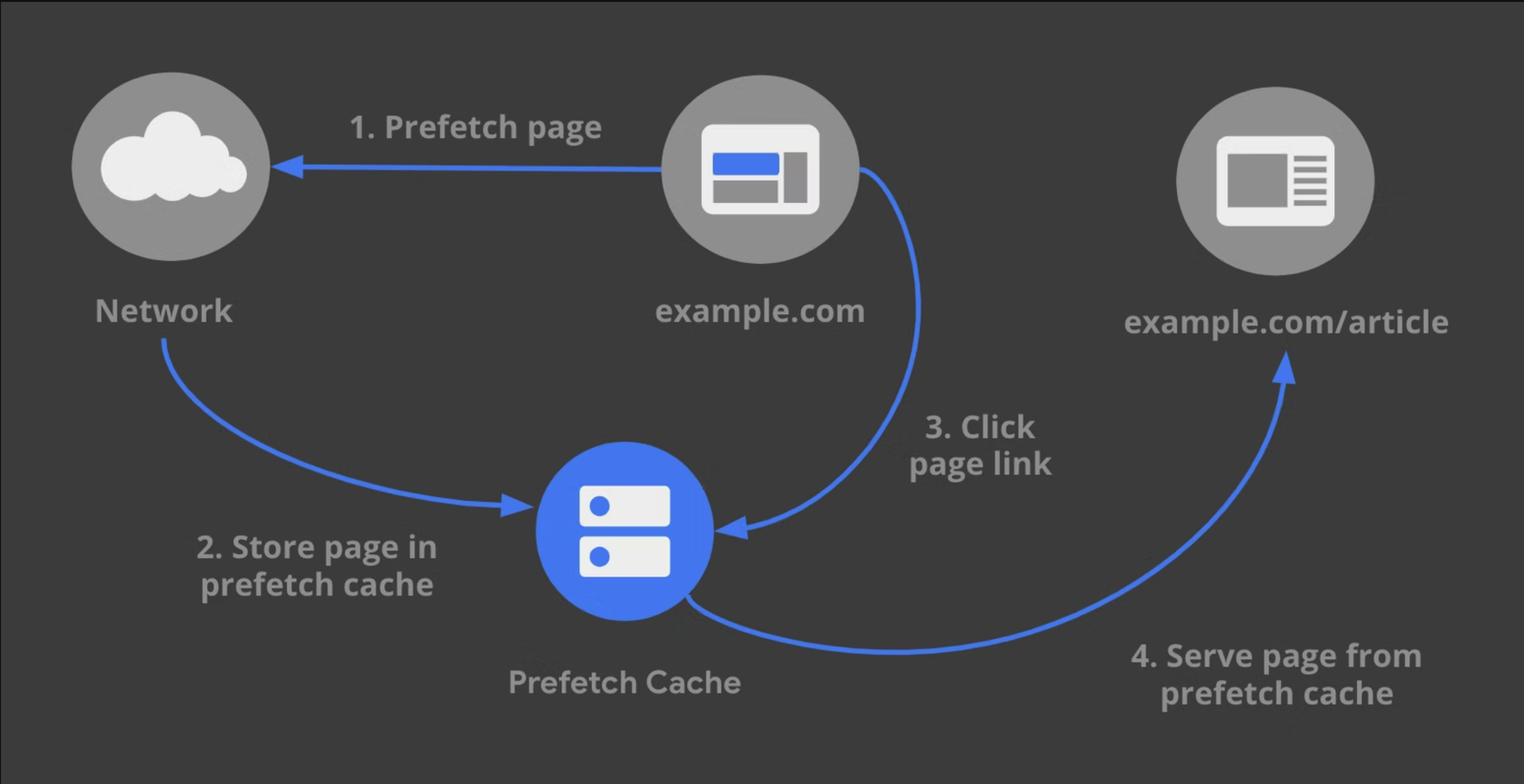

Prefetching resources

Prefetching 🔗 is one of the best ways to improve fetching performance. If you have other routes with heavy requests and their links appear in the viewport, you can prefetch that page’s resources ahead of time at low priority so they are stored in the HTTP cache. When the user clicks the link, the page loads almost instantly, without waiting for those resources.

This is easy to apply in an SPA, and here is a nice article by Addy Osmani 🔗 explaining a tool called Quicklink, which helps you do it easily in React (only with react-router).

Also worth mentioning: if you are using a modern framework like Next.js 🔗 or Remix 🔗, or Nuxt 🔗 with Vue, it provides a link component (Link in Next.js and Remix, NuxtLink in Nuxt) that does this job for you, with some options and defaults. A particularly interesting one is Remix's prefetch option: with prefetch="intent", it starts fetching the linked page’s content as soon as the user hovers over or focuses the link, which makes navigation feel snappy. Check the links for more information.
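To make the idea concrete without a framework, here is a minimal sketch of intent-based prefetching, similar in spirit to what those link components do but not their actual implementation. `prefetch`, `prefetchOnIntent`, and the `resources` list (for example a route's JS chunk or a JSON endpoint) are hypothetical names, and the `doc` parameter exists only so the code can run outside a browser. It adds a `<link rel="prefetch">` hint once per URL; if the response is cacheable, a later request for the same URL can then be served from the HTTP cache.

```typescript
// Tracks URLs that already have a prefetch hint, so each is requested only once.
const prefetched = new Set<string>();

// Adds a low-priority <link rel="prefetch"> hint for `href`.
function prefetch(href: string, doc: Document = document): boolean {
  if (prefetched.has(href)) return false; // already requested: don't fetch twice
  prefetched.add(href);
  const link = doc.createElement('link');
  link.rel = 'prefetch'; // the browser fetches it at low priority into the HTTP cache
  link.href = href;
  doc.head.appendChild(link);
  return true;
}

// Prefetch a route's resources as soon as the user shows intent (hover or keyboard focus).
function prefetchOnIntent(
  anchor: HTMLAnchorElement,
  resources: string[],
  doc: Document = document,
): void {
  const start = () => resources.forEach((href) => prefetch(href, doc));
  anchor.addEventListener('mouseenter', start, { once: true });
  anchor.addEventListener('focus', start, { once: true });
}
```

Hovering and then focusing the same link triggers `start` twice, but the `prefetched` set ensures each resource is only hinted once.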

Conclusion

Well, here are the main takeaways from this article for better data-fetching performance:

- Always watch the Network tab in your application (waterfalls).
- Understand caching and use it wisely.
- Use React hooks or the Vue Composition API with caution.
- Prevent double (or more) calls using AbortController.
- Try to lazy-load the data-fetching-heavy parts if they are outside the viewport.
- Prefetch resources and use the Link component in modern frameworks.
- Keep measuring performance.

That’s it! I hope this helps you improve your project’s performance. Please Tweet me 🔗 any questions or comments about this post.

Tot ziens 👋